PRS-Net: Planar Reflective Symmetry Detection Net for 3D Models

Lin Gao¹, Ling-Xiao Zhang¹, Hsien-Yu Meng², Yi-Hui Ren¹, Yu-Kun Lai³, Leif Kobbelt⁴

¹Institute of Computing Technology, Chinese Academy of Sciences

²University of Maryland, ³Cardiff University, ⁴RWTH Aachen University

Accepted by IEEE Transactions on Visualization and Computer Graphics (TVCG)

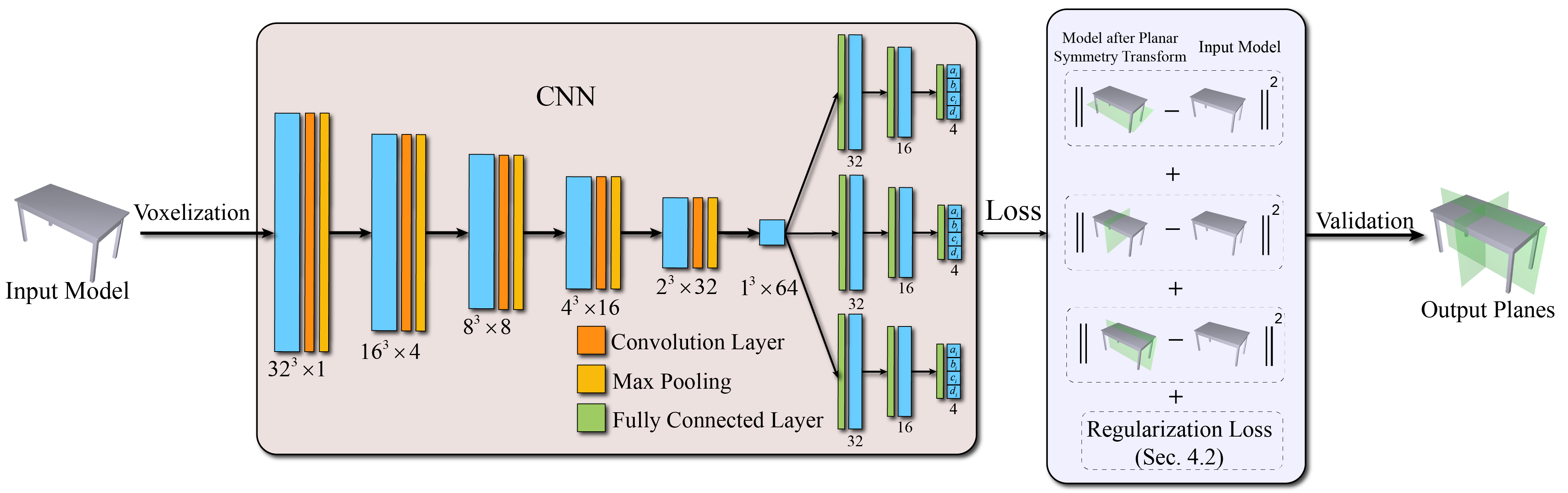

Figure: Overview of our method. The input to the network is the voxelized volume of the mesh model. A CNN predicts the parameters of the symmetry planes, and the planar reflective symmetry transforms associated with these planes define the loss used to train the CNN. The CNN is therefore trained in an unsupervised manner, without any labeled data. A regularization loss prevents the network from predicting repeated symmetry planes. To simplify the network architecture, our network predicts a fixed number (three in practice) of symmetry planes and rotation axes, which may not all be valid. Duplicated or invalid symmetry planes are removed in the validation stage.
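The two losses described in the caption can be illustrated with a small NumPy sketch. It assumes each plane is stored as four numbers (a, b, c, d) for ax + by + cz + d = 0. It measures the symmetry distance as the mean Euclidean distance from each reflected surface sample to its nearest original sample, a brute-force stand-in for a proper closest-point query against the surface. As one plausible form of the regularization loss, it uses the orthogonality penalty ||A A^T - I||_F^2 on the row-normalized normals A. The function names are hypothetical, and the sketch is not differentiable as written; a training implementation would express the same operations in an autodiff framework.

```python
import numpy as np


def reflect_points(points: np.ndarray, plane) -> np.ndarray:
    """Reflect (N, 3) points across the plane a*x + b*y + c*z + d = 0.

    `plane` is (a, b, c, d); the normal need not be unit length.
    """
    n = np.asarray(plane[:3], dtype=float)
    scale = np.linalg.norm(n)
    n_hat, d_hat = n / scale, float(plane[3]) / scale
    signed_dist = points @ n_hat + d_hat  # signed distance of each point to the plane
    return points - 2.0 * signed_dist[:, None] * n_hat


def symmetry_distance_loss(points: np.ndarray, plane) -> float:
    """Mean distance from each reflected sample to its nearest original sample.

    Brute-force O(N^2) nearest neighbour; a stand-in for a closest-point
    query against the true surface.
    """
    reflected = reflect_points(points, plane)
    diff = reflected[:, None, :] - points[None, :, :]  # (N, N, 3) pairwise offsets
    nearest = np.linalg.norm(diff, axis=-1).min(axis=1)
    return float(nearest.mean())


def regularization_loss(normals: np.ndarray) -> float:
    """Squared Frobenius norm of A A^T - I for the row-normalized normals A.

    Zero when the predicted normals are mutually orthogonal; grows when two
    predicted planes (nearly) coincide.
    """
    a = normals / np.linalg.norm(normals, axis=1, keepdims=True)
    m = a @ a.T - np.eye(a.shape[0])
    return float(np.sum(m ** 2))


if __name__ == "__main__":
    # Samples that are mirror-symmetric about the plane x = 0.
    pts = np.array([[1.0, 0.0, 0.0], [-1.0, 0.0, 0.0],
                    [2.0, 1.0, 0.0], [-2.0, 1.0, 0.0]])
    print(symmetry_distance_loss(pts, (1.0, 0.0, 0.0, 0.0)))  # 0.0: a true symmetry
    print(symmetry_distance_loss(pts, (0.0, 1.0, 0.0, 0.0)))  # > 0: not a symmetry
    print(regularization_loss(np.array([[1.0, 0, 0], [1.0, 0, 0], [0, 0, 1.0]])))  # 2.0
```

In this form, a duplicated pair of normals contributes 2 to the penalty, which is what discourages the network from spending two of its three outputs on the same plane.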

Abstract

Symmetry is a universal type of high-level structural information in 3D models and benefits many geometry processing tasks, including shape segmentation, alignment, matching, and completion. Analyzing the various forms of symmetry of 3D shapes is therefore an important problem, and planar reflective symmetry is the most fundamental of them. Traditional methods based on spatial sampling can be time-consuming and may fail to identify all symmetry planes. In this paper, we present a novel learning framework that automatically discovers the global planar reflective symmetry of a 3D shape. Our framework trains a 3D convolutional neural network in an unsupervised manner to extract global model features and output the parameters of possible global symmetries, with input shapes represented as voxels. We introduce a dedicated symmetry distance loss, together with a regularization loss that avoids generating duplicated symmetry planes. Our network can also identify generalized cylinders by predicting their rotation axes. We further provide a method to remove invalid and duplicated planes and axes. We demonstrate that our method produces reliable and accurate results. Our neural-network-based method is hundreds of times faster than state-of-the-art sampling-based methods, and it is robust to noisy or incomplete input surfaces.
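The removal of invalid and duplicated planes can be sketched as a greedy filter. This is a hypothetical illustration, not the authors' procedure. It assumes each predicted plane comes with its symmetry distance loss and discards any plane whose loss exceeds a threshold. Visiting the remaining planes from lowest to highest loss, it also discards any plane whose normal lies within a small angle of an already kept plane. The function name and both thresholds (`max_loss`, `min_angle_deg`) are placeholders chosen for illustration.

```python
import numpy as np


def validate_planes(planes, losses, max_loss=4e-4, min_angle_deg=30.0):
    """Return indices of planes to keep, ordered from lowest to highest loss.

    planes: (K, 4) array of (a, b, c, d); losses: (K,) symmetry distance losses.
    The default thresholds are hypothetical placeholders.
    """
    planes = np.asarray(planes, dtype=float)
    losses = np.asarray(losses, dtype=float)
    cos_thresh = np.cos(np.deg2rad(min_angle_deg))
    kept = []
    for i in np.argsort(losses):  # best (lowest loss) candidates first
        if losses[i] > max_loss:
            continue  # invalid: reflecting does not map the shape onto itself
        n_i = planes[i, :3] / np.linalg.norm(planes[i, :3])
        duplicate = False
        for j in kept:
            n_j = planes[j, :3] / np.linalg.norm(planes[j, :3])
            # abs() treats opposite normals (n and -n) as the same plane direction
            if abs(float(n_i @ n_j)) > cos_thresh:
                duplicate = True
                break
        if not duplicate:
            kept.append(int(i))
    return kept


if __name__ == "__main__":
    candidates = [[1, 0, 0, 0], [0.99, 0.1, 0, 0], [0, 1, 0, 0], [0, 0, 1, 0]]
    print(validate_planes(candidates, [1e-4, 2e-4, 3e-4, 1e-2]))  # [0, 2]
```

Comparing only the normals treats parallel planes with different offsets as duplicates. That is reasonable for global symmetries passing near the shape's center, but an offset test would be needed otherwise. The same filter could be applied to predicted rotation axes by comparing axis directions.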

Paper

PRS-Net: Planar Reflective Symmetry Detection Net for 3D Models

Code

Results

Symmetry Detection

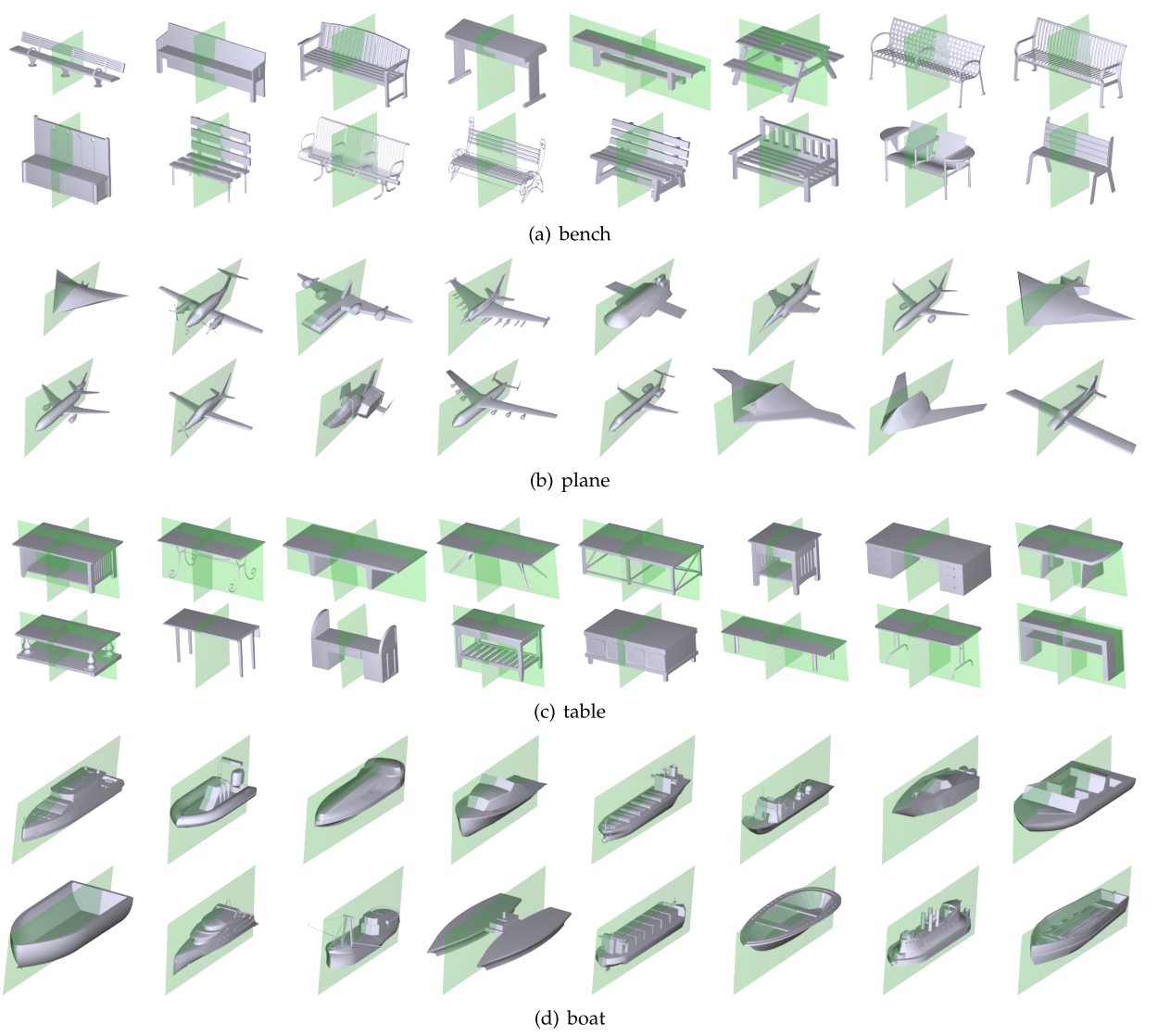

Figure: Symmetry planes discovered by our method for different shapes from the ShapeNet test set: (a) bench, (b) plane, (c) table, and (d) boat.

Shape Completion

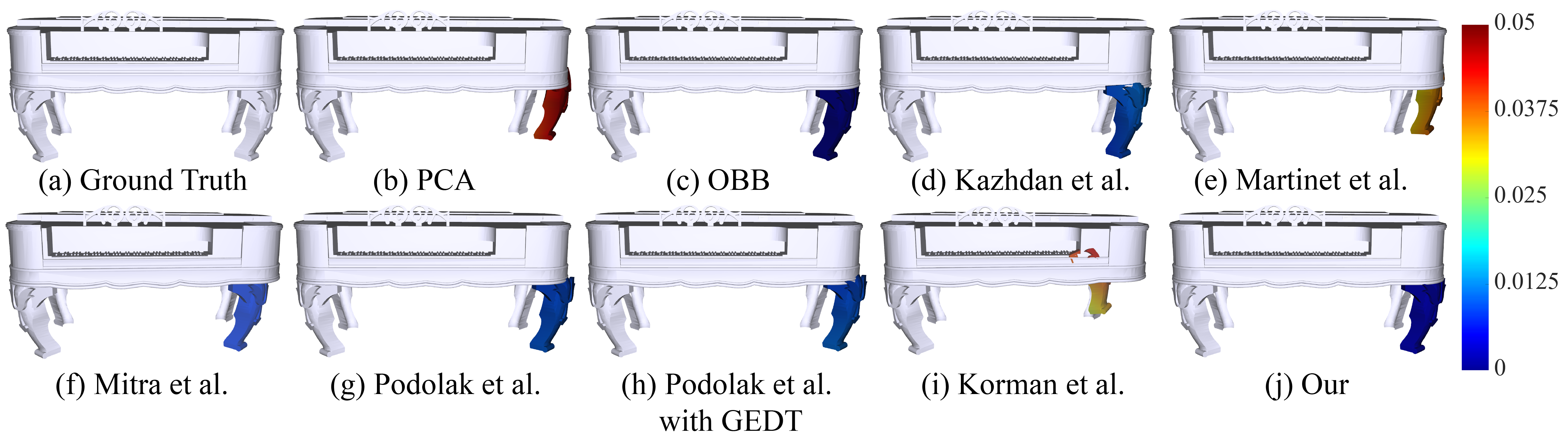

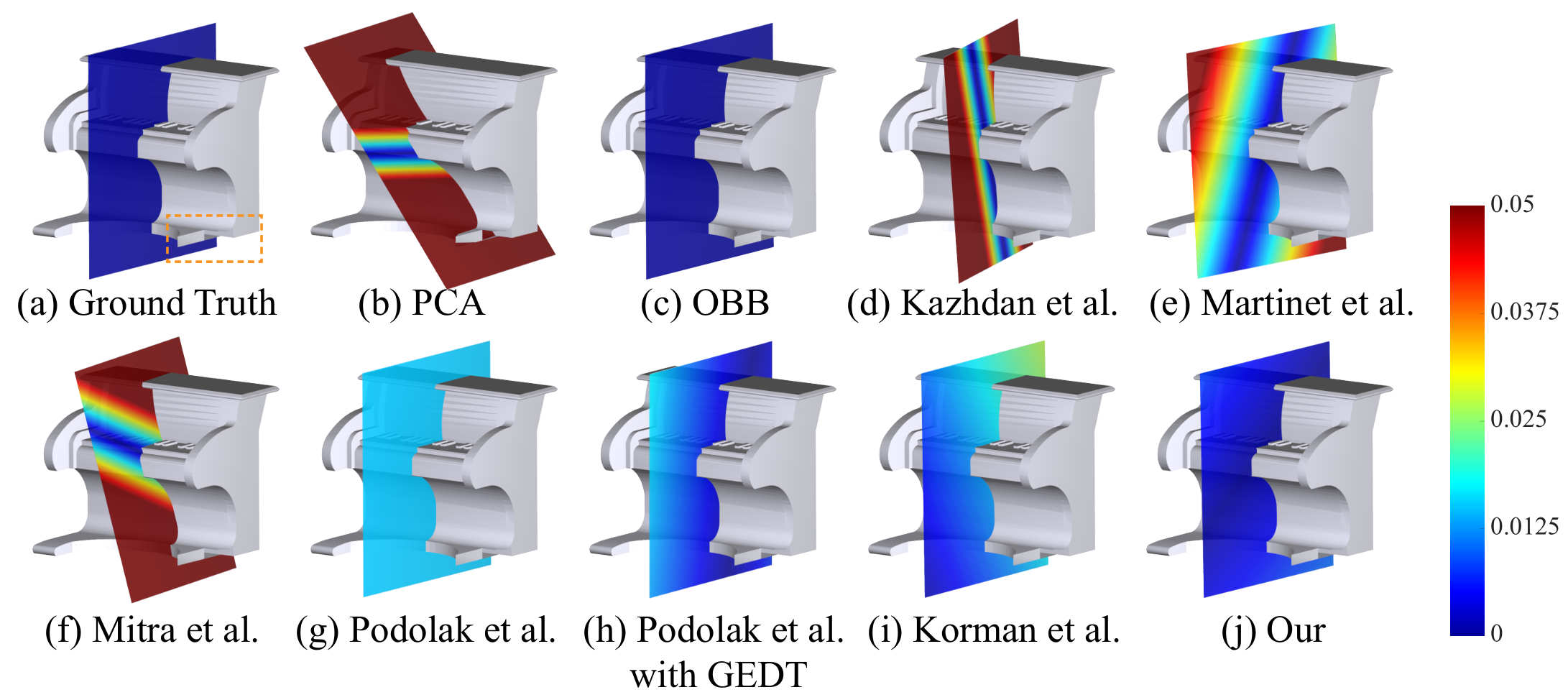

Figure: Owing to its robustness and accuracy, our method repairs the incomplete shape faithfully. The figure shows the Euclidean error between the ground-truth part of the piano and the generated part, which is obtained by mirroring the geometry of the left leg across the symmetry planes detected by different methods.
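Completing a shape by mirroring amounts to applying the reflection defined by a detected plane to the surviving geometry. The sketch below, with hypothetical function names, builds the 4×4 homogeneous reflection matrix for a plane ax + by + cz + d = 0 and mirrors a vertex array with it. It then scores the result by the mean distance from each generated vertex to its nearest ground-truth vertex. That error measure is an assumption for illustration; the exact metric behind the figure is not specified here.

```python
import numpy as np


def reflection_matrix(plane) -> np.ndarray:
    """4x4 homogeneous matrix for reflection across a*x + b*y + c*z + d = 0.

    Implements x' = x - 2 (n.x + d) n with the plane normalized so |n| = 1.
    """
    n = np.asarray(plane[:3], dtype=float)
    scale = np.linalg.norm(n)
    n_hat, d_hat = n / scale, float(plane[3]) / scale
    m = np.eye(4)
    m[:3, :3] -= 2.0 * np.outer(n_hat, n_hat)  # Householder part: I - 2 n n^T
    m[:3, 3] = -2.0 * d_hat * n_hat            # translation for planes off the origin
    return m


def mirror_part(vertices: np.ndarray, plane) -> np.ndarray:
    """Mirror an (N, 3) vertex array across the plane."""
    homo = np.hstack([vertices, np.ones((len(vertices), 1))])
    return (homo @ reflection_matrix(plane).T)[:, :3]


def completion_error(generated: np.ndarray, ground_truth: np.ndarray) -> float:
    """Mean distance from each generated vertex to its nearest ground-truth vertex."""
    diff = generated[:, None, :] - ground_truth[None, :, :]
    return float(np.linalg.norm(diff, axis=-1).min(axis=1).mean())


if __name__ == "__main__":
    left_leg = np.array([[-1.0, 0.0, 0.0], [-1.0, 0.0, 1.0], [-1.2, 0.1, 0.5]])
    right_leg = left_leg * np.array([-1.0, 1.0, 1.0])  # ground truth, symmetric about x = 0
    exact = mirror_part(left_leg, (1.0, 0.0, 0.0, 0.0))
    tilted = mirror_part(left_leg, (1.0, 0.1, 0.0, 0.0))
    print(completion_error(exact, right_leg), completion_error(tilted, right_leg))
```

A reflection has determinant -1. When mirroring a triangle mesh rather than a point set, the vertex order of each face should therefore be reversed so that face normals keep pointing outward.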

Robustness

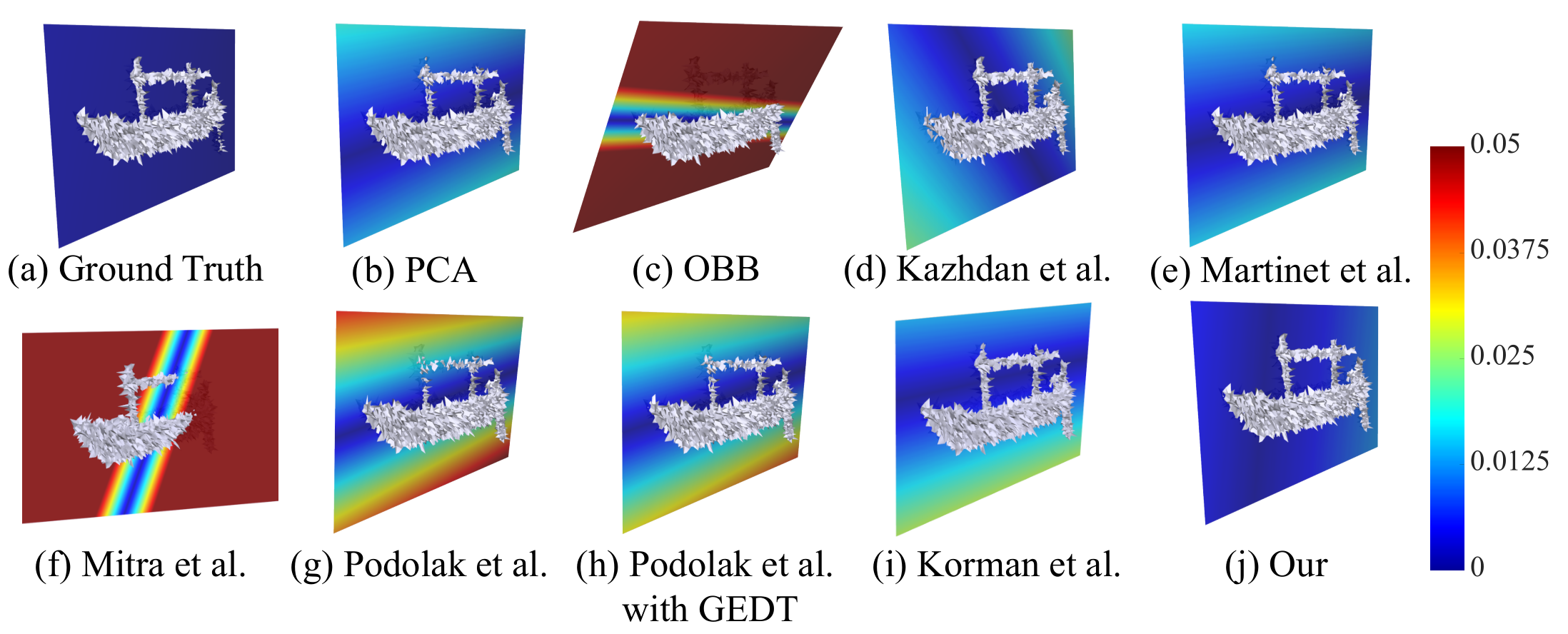

Figure: Robustness test in which Gaussian noise is added to each vertex along its normal direction. Our method produces stable output under this perturbation.
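A perturbation like the one in this test can be produced by displacing each vertex along its unit normal by a zero-mean Gaussian offset. This sketch assumes a triangle mesh given as vertex and face arrays with counter-clockwise faces. It estimates vertex normals by summing area-weighted face normals and takes the noise standard deviation `sigma` as a free parameter. The function names and the choice of normal estimate are illustrative assumptions, and the noise levels used for the figure are not stated here.

```python
import numpy as np


def vertex_normals(vertices: np.ndarray, faces: np.ndarray) -> np.ndarray:
    """Unit vertex normals from area-weighted face normals (counter-clockwise faces)."""
    tri = vertices[faces]                                            # (F, 3, 3)
    face_n = np.cross(tri[:, 1] - tri[:, 0], tri[:, 2] - tri[:, 0])  # length = 2 * area
    normals = np.zeros_like(vertices)
    for k in range(3):
        np.add.at(normals, faces[:, k], face_n)  # accumulate onto each corner vertex
    length = np.linalg.norm(normals, axis=1, keepdims=True)
    return normals / np.maximum(length, 1e-12)   # guard against isolated vertices


def add_normal_noise(vertices, faces, sigma, seed=None):
    """Move each vertex along its normal by an offset drawn from N(0, sigma^2)."""
    rng = np.random.default_rng(seed)
    offsets = rng.normal(0.0, sigma, size=len(vertices))
    return vertices + offsets[:, None] * vertex_normals(vertices, faces)


if __name__ == "__main__":
    verts = np.array([[0.0, 0.0, 0.0], [1.0, 0.0, 0.0], [0.0, 1.0, 0.0]])
    faces = np.array([[0, 1, 2]])
    noisy = add_normal_noise(verts, faces, sigma=0.01, seed=0)
    print(noisy)  # x and y unchanged; only z moves, since the normal is +z
```

Passing a fixed `seed` makes each noise level reproducible, so the same perturbed meshes can be fed to every method being compared.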

Figure: Robustness test in which parts of the model are removed. We remove the left leg of the piano and measure the error with respect to the complete model. Our network still produces accurate results even when large parts of the input are missing.

BibTex

author={L. {Gao} and L. -X. {Zhang} and H. -Y. {Meng} and Y. -H. {Ren} and Y. -K. {Lai} and L. {Kobbelt}},
title={PRS-Net: Planar Reflective Symmetry Detection Net for 3D Models},
journal={IEEE Transactions on Visualization and Computer Graphics},
year={2020},
volume={},
pages={1-1},
number={},
doi={10.1109/TVCG.2020.3003823}
}


Last updated on February 2021.