Automatic Unpaired Shape Deformation Transfer

Lin Gao1 Jie Yang1,2 Yi-Ling Qiao1,2 Yu-Kun Lai3 Paul L. Rosin3 Weiwei Xu4 Shihong Xia1

1Institute of Computing Technology, Chinese Academy of Sciences 2University of Chinese Academy of Sciences 3Cardiff University 4Zhejiang University

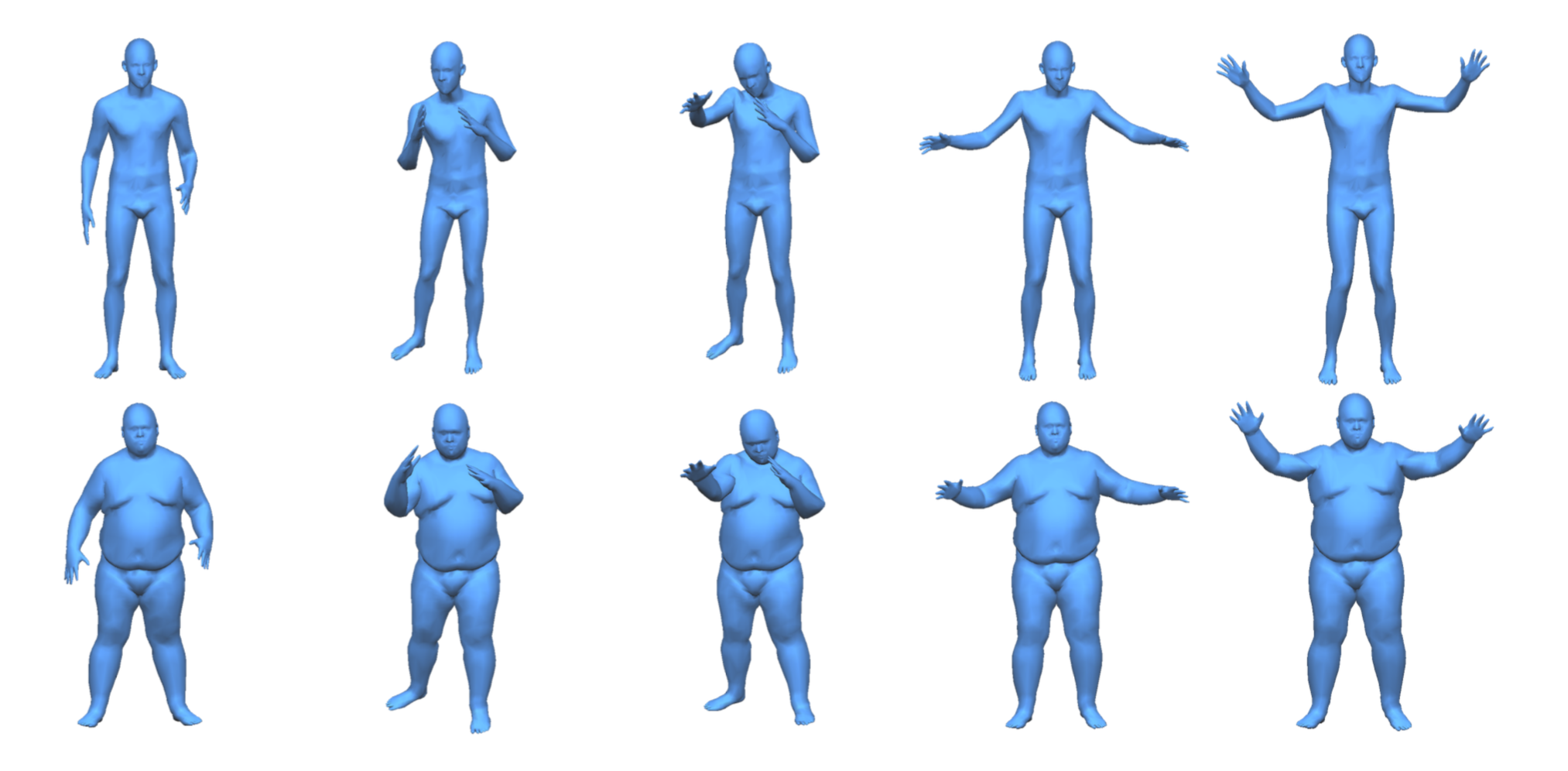

Figure: Deformation transfer from a fit person to a fat person, both from the MPI DYNA dataset [Pons-Moll et al. 2015]. First row: source fit person shapes; second row: our results of deformation transfer to a fat person. Our method automatically transfers rich actions across shapes with substantial geometric differences without the need to specify correspondences or shape pairs between source and target.

Abstract

Transferring deformation from a source shape to a target shape is a very useful technique in computer graphics. State-of-the-art deformation transfer methods require either point-wise correspondences between source and target shapes, or pairs of deformed source and target shapes with corresponding deformations. However, in most cases, such correspondences are not available and cannot be reliably established using an automatic algorithm. Therefore, substantial user effort is needed to label the correspondences or to obtain and specify such shape sets. In this work, we propose a novel approach to automatic deformation transfer between two unpaired shape sets without correspondences. 3D deformation is represented in a high-dimensional space. To obtain a more compact and effective representation, two convolutional variational autoencoders are learned to encode source and target shapes to their latent spaces. We exploit a Generative Adversarial Network (GAN) to map deformed source shapes to deformed target shapes, both in the latent spaces, which ensures that the shapes obtained from the mapping are indistinguishable from the target shapes. This VAE-Cycle GAN (VC-GAN) architecture is used to build a reliable mapping between the shape spaces. This is still an under-constrained problem, so we further utilize a reverse mapping from target shapes to source shapes and incorporate a cycle consistency loss, i.e., applying both mappings in sequence should return the input shape. Finally, a similarity constraint is employed to ensure the mapping is consistent with visual similarity, achieved by learning a similarity neural network that takes the embedding vectors from the source and target latent spaces and predicts the light field distance between the corresponding shapes.
Experimental results show that our fully automatic method is able to obtain high-quality deformation transfer results with unpaired data sets, comparable to or better than existing methods where strict correspondences are required.
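The cycle consistency idea described above can be illustrated with a minimal sketch. It is not the paper's implementation: the latent dimension, the L1 norm, the function name `cycle_consistency_loss`, and the linear stand-ins `G` and `F` for the learned source-to-target and target-to-source mappings are all assumptions made for illustration.

```python
import numpy as np


def cycle_consistency_loss(z_src, z_tgt, G, F):
    """Mean L1 cycle loss on latent codes (the norm is an assumption).

    G maps source latents to target latents; F maps target latents back.
    """
    # source -> target -> source should land on the starting code
    forward_cycle = np.mean(np.abs(F(G(z_src)) - z_src))
    # target -> source -> target should do the same
    backward_cycle = np.mean(np.abs(G(F(z_tgt)) - z_tgt))
    return forward_cycle + backward_cycle


rng = np.random.default_rng(0)
latent_dim = 8  # hypothetical latent size

# Hypothetical stand-in for a learned mapping: an orthogonal matrix,
# whose exact inverse is its transpose.
Q, _ = np.linalg.qr(rng.normal(size=(latent_dim, latent_dim)))

z_src = rng.normal(size=(16, latent_dim))  # batch of source latent codes
z_tgt = rng.normal(size=(16, latent_dim))  # batch of target latent codes

# Mutually inverse mappings: both cycles return (numerically) to the input.
loss_consistent = cycle_consistency_loss(
    z_src, z_tgt, G=lambda z: z @ Q, F=lambda z: z @ Q.T
)

# A reverse mapping that ignores G (here: zeroing the code) breaks the cycle.
loss_inconsistent = cycle_consistency_loss(
    z_src, z_tgt, G=lambda z: z @ Q, F=lambda z: np.zeros_like(z)
)

print(loss_consistent, loss_inconsistent)
```

In training, a term like this would be minimized together with the adversarial and similarity terms; here it only shows that mutually inverse mappings drive the loss toward zero, while a mismatched reverse mapping does not.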

Paper

Automatic Unpaired Shape Deformation Transfer

Code

Supplementary

Additional Materials

Our Example Results


BibTeX

author = {Gao, Lin and Yang, Jie and Qiao, Yi-Ling and Lai, Yu-Kun and Rosin, Paul L and Xu, Weiwei and Xia, Shihong},

title = {Automatic Unpaired Shape Deformation Transfer},

journal = {ACM Transactions on Graphics (Proceedings of ACM SIGGRAPH Asia 2018)},

year = {2018},

volume = {37},

pages = {To appear},

number = {6}

}


Last updated in September 2018.